Tech-Today

Logstash is an open source tool for collecting, parsing and storing logs/data for future use.

Kibana is a web interface that can be used to search and view the logs/data that Logstash has indexed. Both of these tools are based on Elasticsearch, which is used for storing logs.

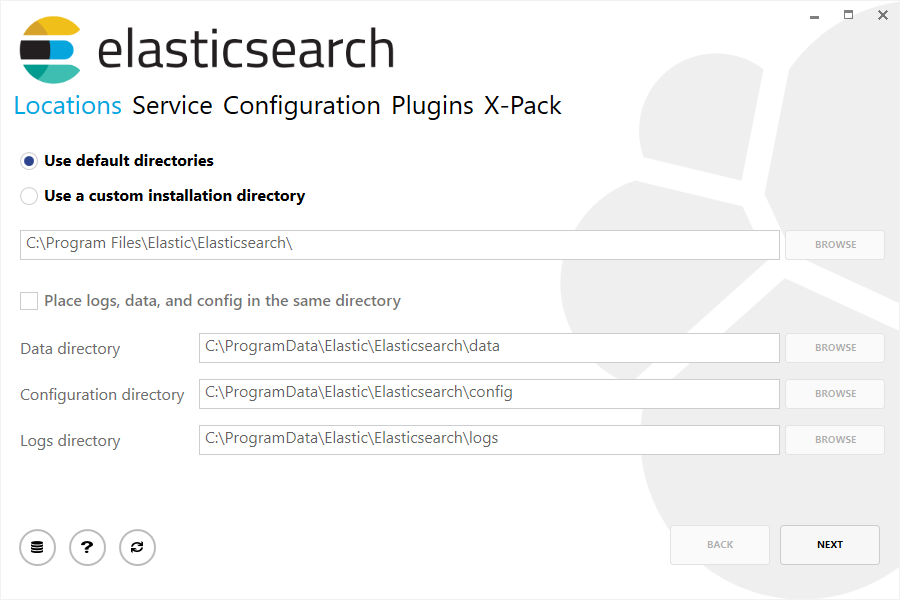

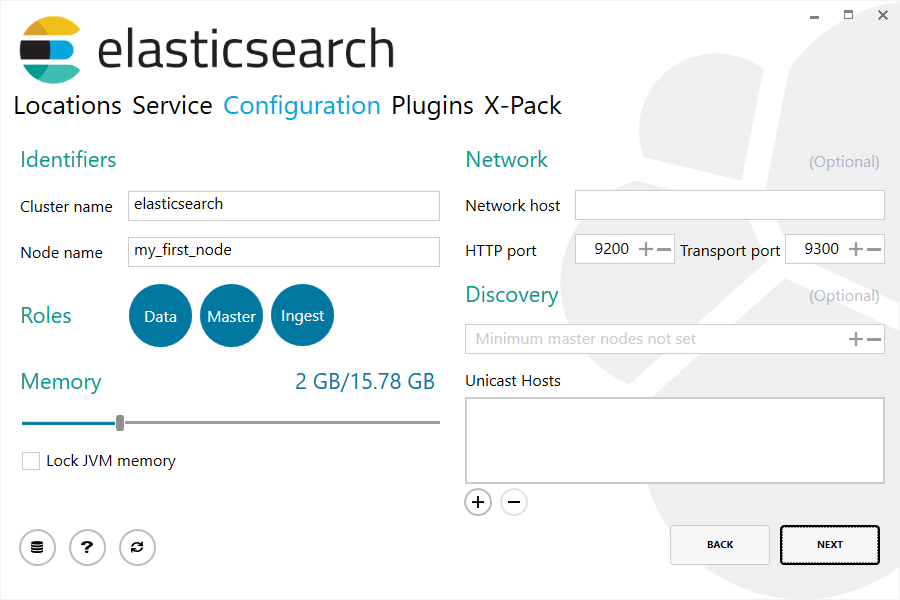

For configuration, simply leave the default values:

For configuration, simply leave the default values:

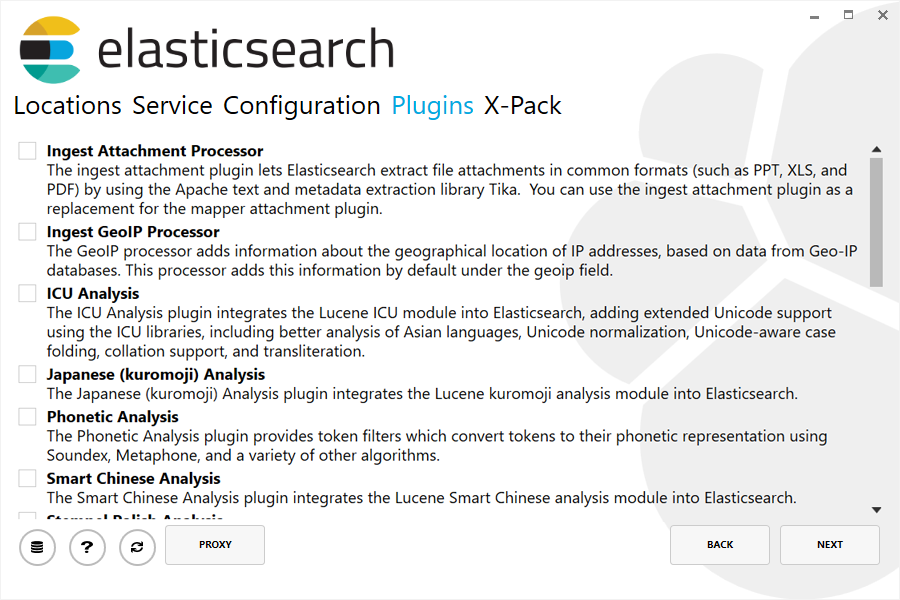

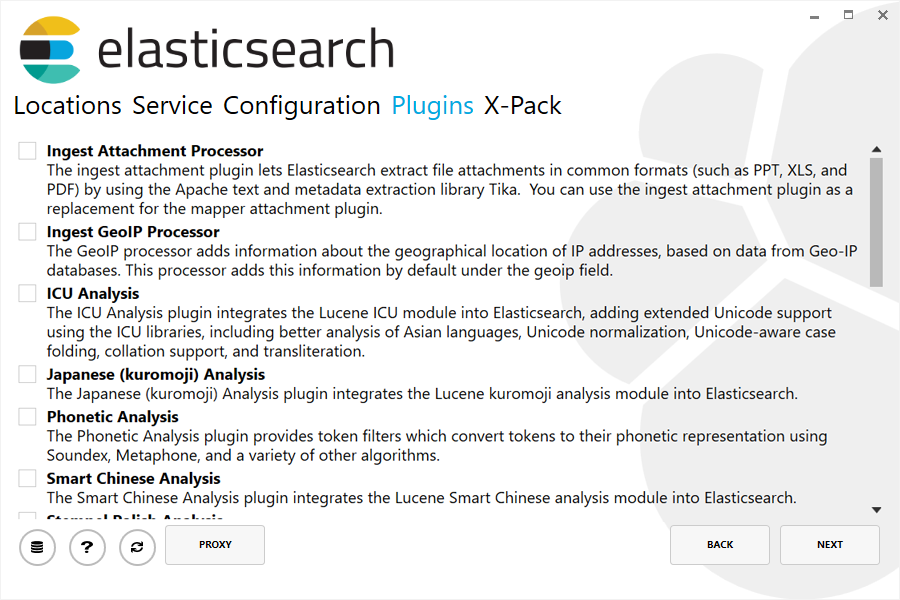

Uncheck all plugins to not install any plugins:

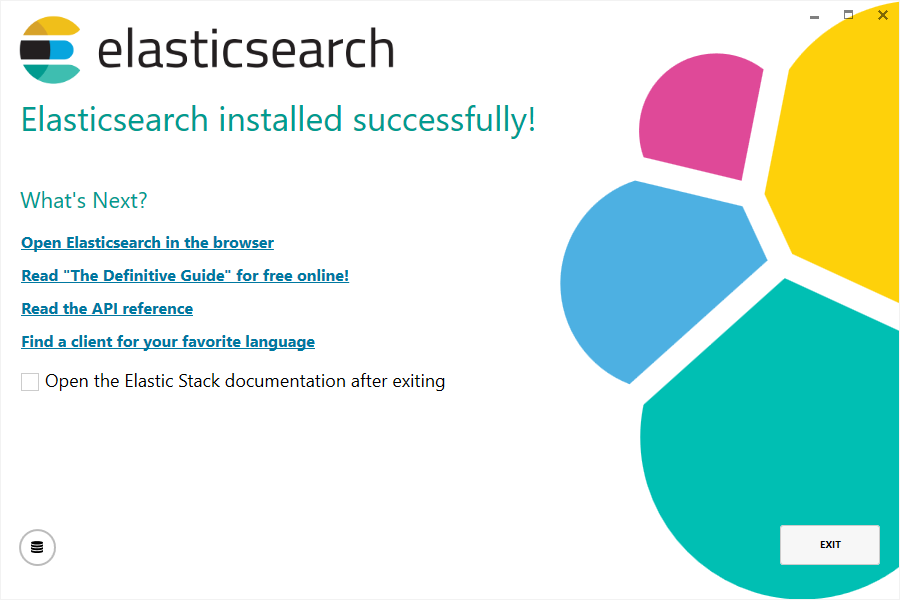

After clicking the install button, Elasticsearch will be installed:

To check if the Elasticsearch is running, open command prompt and type "services.msc" and look for Elasticsearch. You should see the status that it is 'Running'.

Or simply download the zipped file from https://www.elastic.co/downloads/elasticsearch.

The runnable file is located at bin\logstash.bat.

The runnable file is located at bin\logstash.bat.


Setting Up ELK and Pushing Relational Data Using the Logstash JDBC Input Plugin and Secrets Keystore

Introduction

In this tutorial, we will go over the installation of Elasticsearch, Kibana, and Logstash. We will also show you how to configure them to gather and visualize data from a database.

Logstash is an open source tool for collecting, parsing, and storing logs/data for future use.

Kibana is a web interface that can be used to search and view the logs/data that Logstash has indexed. Both of these tools are based on Elasticsearch, which is used for storing logs.

Our Goal

The goal of this tutorial is to set up Logstash to gather records from a database and set up Kibana to create a visualization.

Our ELK stack setup has three main components:

- Logstash: The server component of Logstash that processes database records

- Elasticsearch: Stores all of the records

- Kibana: Web interface for searching and visualizing logs.

Install Elasticsearch

Install Elasticsearch using the MSI installer package. The package contains a graphical user interface (GUI) that guides you through the installation process.

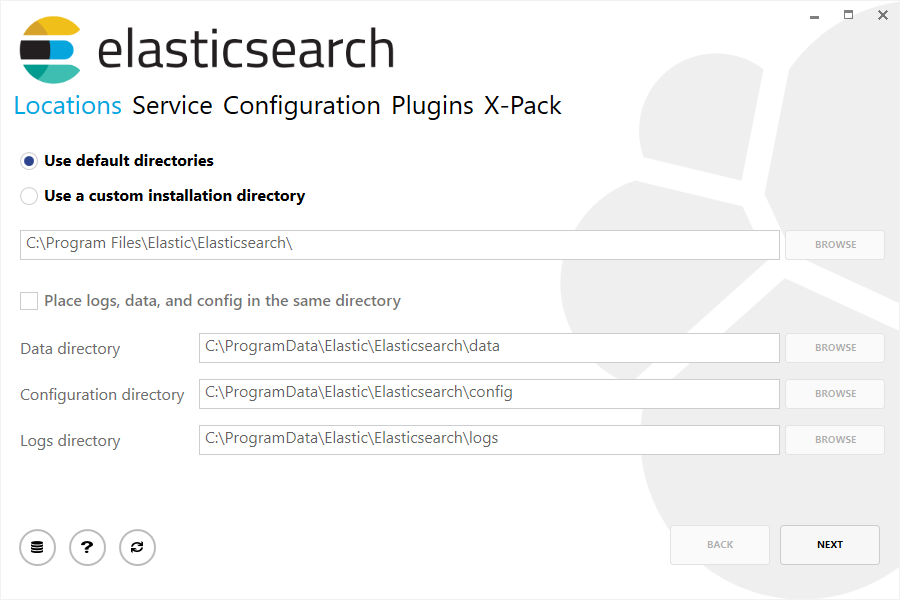

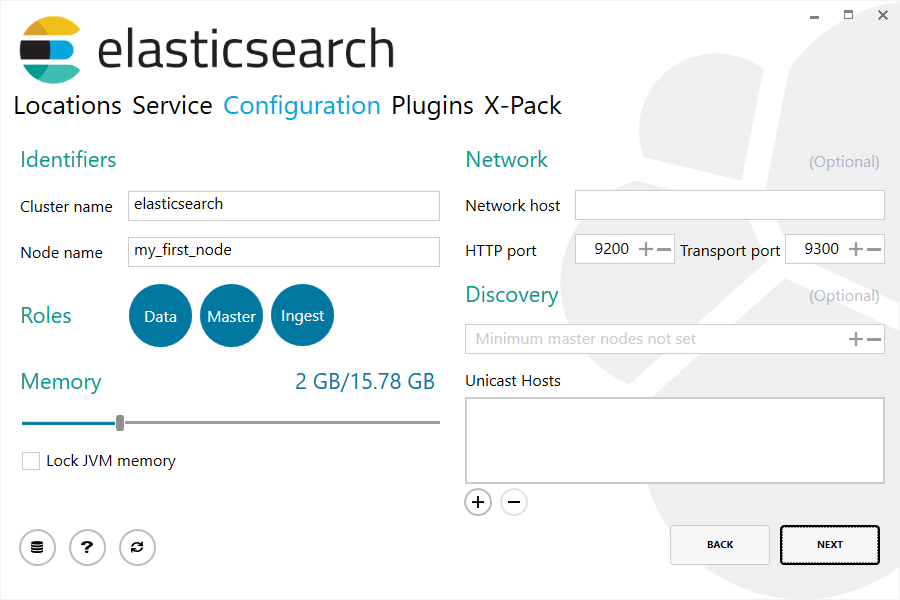

Then double-click the downloaded file to launch the GUI. Within the first screen, select the deployment directories:

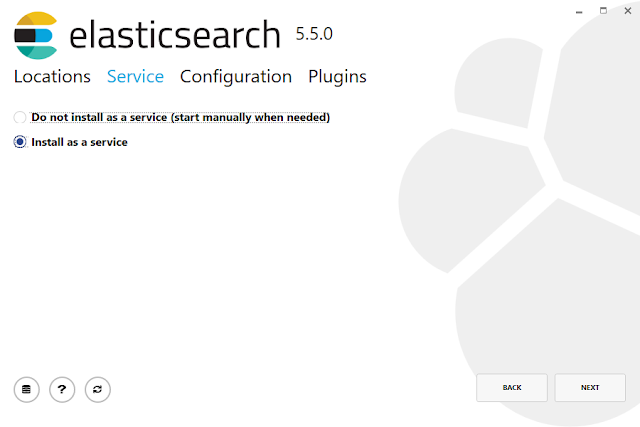

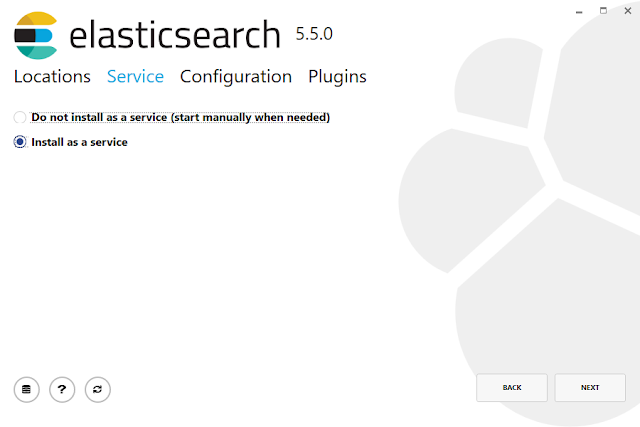

Then select whether to install as a service or start Elasticsearch manually as needed. Choose install as a service:

For configuration, simply leave the default values:

Uncheck all plugins so that none are installed:

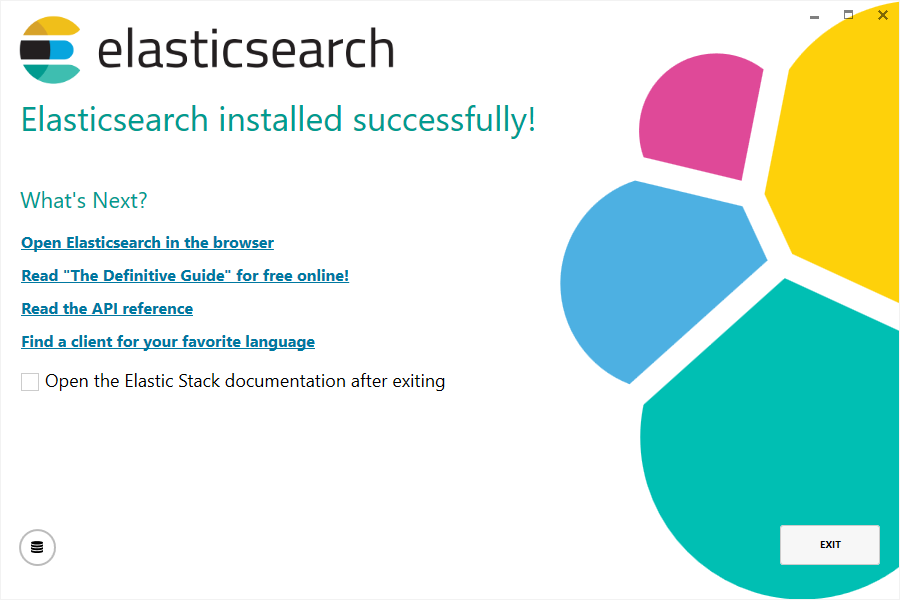

After clicking the install button, Elasticsearch will be installed:

To check whether Elasticsearch is running, open a command prompt, type "services.msc", and look for Elasticsearch. Its status should be 'Running'.

Alternatively, instead of using the MSI installer, simply download the zipped file from https://www.elastic.co/downloads/elasticsearch.

Install Kibana

You can download Kibana from https://www.elastic.co/downloads/kibana.

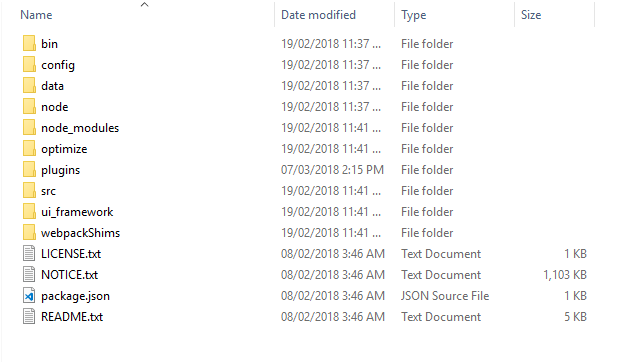

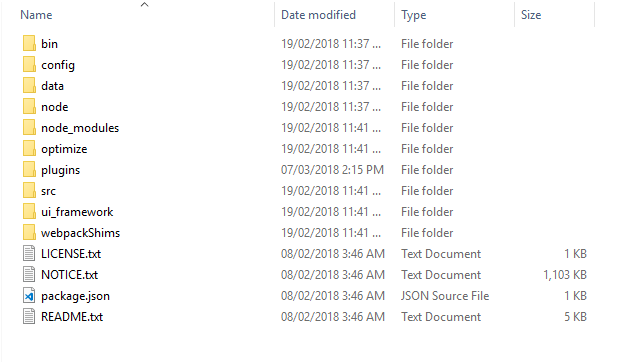

After downloading Kibana and unzipping the file, you will see the folder structure below.

The runnable file is located at bin\kibana.bat.

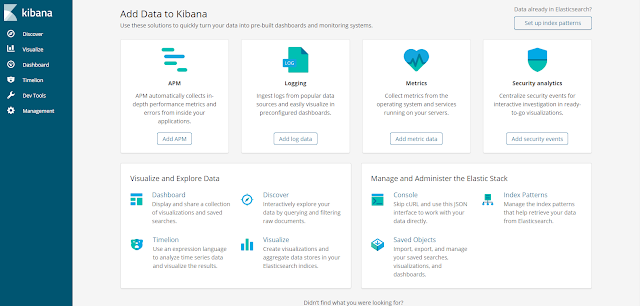

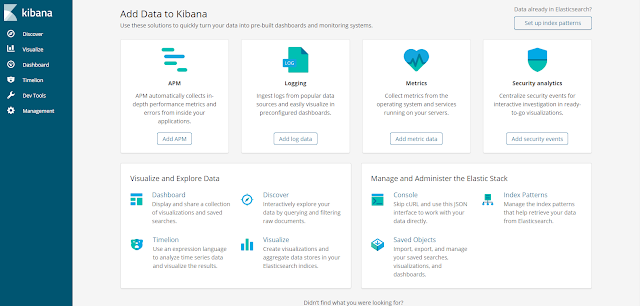

To test, start kibana.bat and point your browser at http://localhost:5601. You should see the Kibana web page.

Install Logstash

You can download Logstash from https://www.elastic.co/products/logstash.

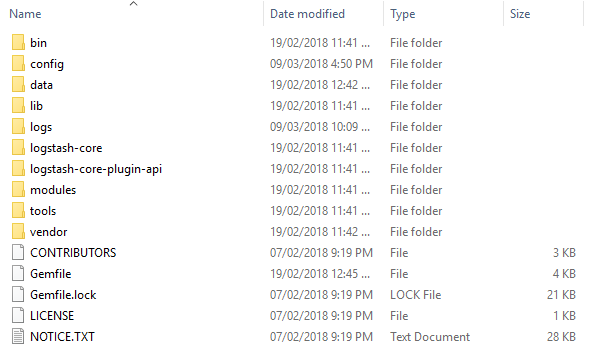

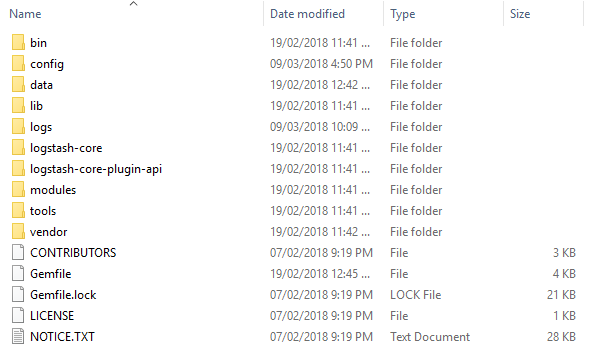

After downloading Logstash and unzipping the file, you will see the folder structure below.

The runnable file is located at bin\logstash.bat.

Inserting Data Into Logstash by Selecting Data From a Database

Elastic.co has a good blog post on this topic: https://www.elastic.co/blog/logstash-jdbc-input-plugin.

Secrets keystore

When you configure Logstash, you might need to specify sensitive settings or configuration, such as passwords. Rather than relying on file system permissions to protect these values, you can use the Logstash keystore to securely store secret values for use in configuration settings.

Create a keystore

To create a secrets keystore, use the create command:

bin/logstash-keystore create

Add keys

To store sensitive values, such as authentication credentials, use the add command:

bin/logstash-keystore add PG_PWD

When prompted, enter a value for the key.

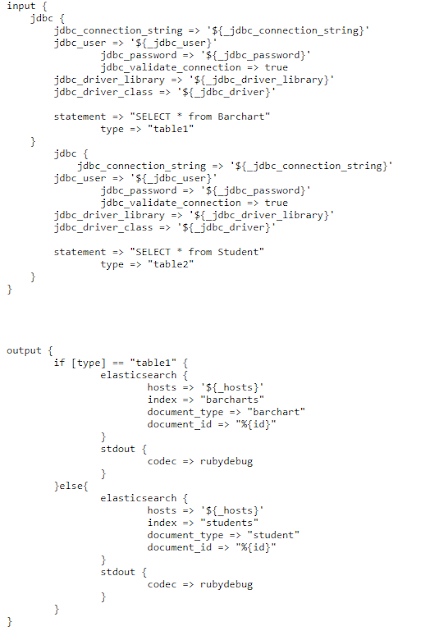

We can use the keystore to store values such as jdbc_connection_string, jdbc_user, and jdbc_password.

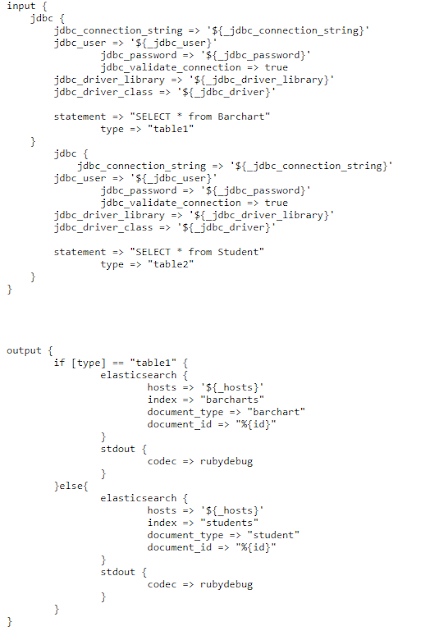

For simplicity, an underscore was added for referencing the keys. See below for a sample config file.
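As a minimal sketch of how keystore keys can be referenced from a pipeline config, the fragment below uses the `${KEY}` syntax in the JDBC input settings. It is an illustration under assumptions, not necessarily the exact file used in this post: `PG_PWD` is the key added earlier, while `PG_URL`, `PG_USER`, the driver path, the `customer` table, and the index name are hypothetical and should be replaced with your own values.

```conf
# Sample pipeline using keystore references.
# PG_URL and PG_USER are assumed key names; PG_PWD is the key added earlier.
input {
  jdbc {
    # Hypothetical location of the PostgreSQL JDBC driver jar
    jdbc_driver_library => "C:/drivers/postgresql.jar"
    jdbc_driver_class => "org.postgresql.Driver"
    # ${KEY} values are resolved from the Logstash keystore
    jdbc_connection_string => "${PG_URL}"
    jdbc_user => "${PG_USER}"
    jdbc_password => "${PG_PWD}"
    # Hypothetical table; replace with your own query
    statement => "SELECT * FROM customer"
  }
}

output {
  elasticsearch {
    hosts => ["http://localhost:9200"]
    # Hypothetical index name
    index => "customer"
  }
}
```

Because the secrets live in the keystore, the config file itself can be shared or committed without exposing the database credentials.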

Let's say that a PostgreSQL table was changed after pushing the data to Elasticsearch. Those changes will not be present in Elasticsearch. To keep Elasticsearch in sync, we need to run Logstash again with the configuration below.

In this configuration, Logstash runs every second. Of course, you wouldn't do that in practice :-) Normally it runs once per day, week, month, etc. The schedule can be configured depending on your needs.
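A scheduled pipeline of this kind might look like the sketch below; it is one common approach, not necessarily the exact configuration used in this post. It assumes the table has an `id` primary key and an `updated_at` timestamp column (both hypothetical), that `PG_URL` and `PG_USER` exist in the keystore alongside `PG_PWD`, and that the six-field cron expression is read with a leading seconds field by the scheduler behind the `schedule` option.

```conf
input {
  jdbc {
    # Hypothetical location of the PostgreSQL JDBC driver jar
    jdbc_driver_library => "C:/drivers/postgresql.jar"
    jdbc_driver_class => "org.postgresql.Driver"
    # PG_URL and PG_USER are assumed keystore keys
    jdbc_connection_string => "${PG_URL}"
    jdbc_user => "${PG_USER}"
    jdbc_password => "${PG_PWD}"
    # Assumed to mean "every second" (six fields, seconds first);
    # use a five-field cron expression for daily or weekly runs
    schedule => "* * * * * *"
    # Fetch only rows changed since the previous run (hypothetical column)
    statement => "SELECT * FROM customer WHERE updated_at > :sql_last_value"
    use_column_value => true
    tracking_column => "updated_at"
    tracking_column_type => "timestamp"
  }
}

output {
  elasticsearch {
    hosts => ["http://localhost:9200"]
    index => "customer"
    # Reuse the primary key as the document id so changed rows
    # overwrite the existing document instead of creating a duplicate
    document_id => "%{id}"
  }
}
```

Setting `document_id` is what turns a re-run into an update: without it, every changed row would be indexed as a new document.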

References:

- Secrets keystore

- Insert Into Logstash Select Data From Database

- Pushing Relational Data to Elasticsearch using Logstash JDBC input plugin
